
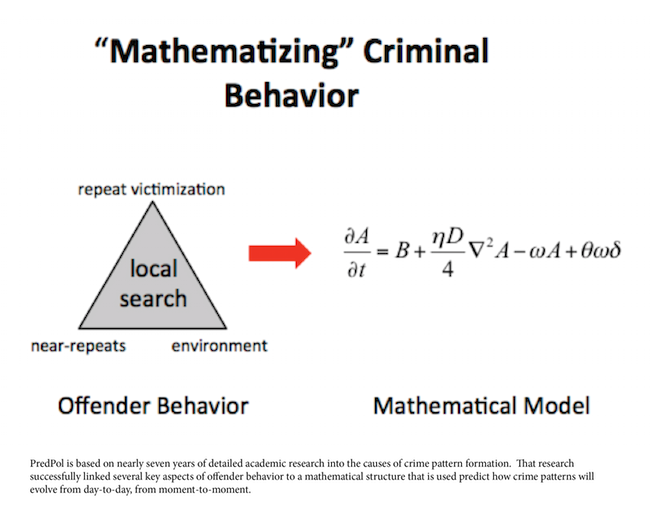

In 2018, a group of students in the University of Virginia’s School of Data Science embarked on a bold research project to answer a tough question: could they use machine learning to figure out who was misbehaving on Wikipedia? The students created a machine learning algorithm that studied the behavior of users who had been blocked by the site and those who had not. The algorithm then used that data to predict which unblocked users might deserve a block. Lane Rasberry, the UVA School of Data Science’s Wikimedian-in-Residence, explained that an algorithm like this, running for only ten hours, could outperform 1,000 human volunteers each contributing 100 hours of civility patrolling per year.

So what did the data scientists do with the magical, predictive algorithm they had created? “We immediately threw all of it away,” said Rasberry.

“We imagined that this would be a multi-year project but the initial results showed high predictive power, so we paused until we could plan how to use it ethically,” Rasberry wrote. “As it turned out, the technical data science is easy, but the social aspect of having an algorithm pass judgment on human behavior is much more difficult to manage. It would be inexpensive and easy for anyone to recreate the tool using this research precedent, but producing ethical guidance to prevent the tool from misuse is much more difficult.”

As Rasberry explains, there’s a dark side to this kind of data-driven “predictive policing.” Data-driven algorithms and other forms of machine learning can grow far beyond what their human creators envisioned, or even comprehended. Tesla executive Elon Musk, no stranger to controversy, famously referred to artificial intelligence as “summoning the demon.” That “demon” is alive at PredPol, a predictive policing company that contracts with local police departments to provide surveillance recommendations.
The company changed its name to Geolitica on March 2, 2021, but this article will refer to it by its original name and purpose: to “analyz[e] historical data [in order to]... help better position patrol officers to prevent crime before it occurs.” PredPol is part of a disturbing trend of using artificial intelligence in policing. Other examples include its predictive policing bedfellow Palantir and the controversial recidivism prediction tool COMPAS. “Crime isn’t as random as you may think,” reads PredPol’s website. “It follows a pattern.”

Of course, no one intends to create a monster. As The Atlantic reported, predictive policing started out as one of Time magazine’s 50 best inventions of 2011. As its website explains, PredPol uses crime data, such as the location, time, and type of a crime, to give officers predictions about which areas to patrol. As writer Eva Ruth Moravec details in The Atlantic, PredPol was meant to make crime response more effective and timely. And so PredPol spread: the program has been used in cities across America, from Birmingham, Alabama, to Mesa, Arizona, and Richmond, California.

But algorithms aren’t always right, morally or factually. One review of predictive policing noted that PredPol perpetuated racial bias in policing by directing officers into Black neighborhoods at a higher rate than white ones. This created a dangerous feedback loop that would essentially reward and reassure the algorithm each time it identified a Black neighborhood as a target. As a result, the authors noted, this reinforcement would keep the algorithm from learning about, and directing police to, predominantly white neighborhoods with similar crime levels.
In June 2020, the city of Santa Cruz banned the use of predictive policing tools like PredPol unless the technology could be proven to “safeguard the civil rights and liberties of all people” and not “perpetuate bias.” But is it still possible to create a data-driven algorithm that actually predicts crime without perpetuating racism? Perhaps if the program were given better data? As the MIT Technology Review points out, the answer is no. Even when programs are given victim reports rather than arrest data, which should theoretically avoid the bias of officers disproportionately arresting people of color, predictive policing still fails to accurately reflect actual crime levels. For one, as author Will Douglas Heaven suggests, a history of police racism or corruption may lead to underreporting in a community, and therefore to an underestimate of its crime levels.

As for Wikipedia, which is patrolled only by volunteers, the status quo alternative to unleashing a misbehavior-predicting algorithm like the one the UVA students created is that a great deal of harassment will continue to fly under the radar. According to Rasberry, this harassment often targets Black, female, and LGBTQ users.

In the offline world, deterring crime remains a stubborn problem that resists the easy fix of an algorithm. The best answer may not be the quickest or cheapest. To truly decrease crime, cities and states will need to begin the long, slow process of reinvesting in the schools, parks, businesses, and families that have been hurt by over-policing, violence, and centuries of racist laws and ordinances. It may not be as new and shiny as the idea of an all-powerful policing algorithm, but it will prevent runaway AI from harming the people it purports to protect.

The views expressed above are solely the author's and are not endorsed by the Virginia Policy Review, The Frank Batten School of Leadership and Public Policy, or the University of Virginia.
Although this organization has members who are University of Virginia students and may have University employees associated or engaged in its activities and affairs, the organization is not a part of or an agency of the University. It is a separate and independent organization which is responsible for and manages its own activities and affairs. The University does not direct, supervise or control the organization and is not responsible for the organization’s contracts, acts, or omissions.



Address: Virginia Policy Review, 235 McCormick Rd., Charlottesville, VA 22904
